Introducing new feature dataTypes for the PolyMath/DataFrame project

Hello Everyone,

I have been working on a new feature, DataFrame >> dataTypes, which reports the data type of each column in the data frames we work with.

Summarising a dataset is really important during the initial stage of any Data Science or Machine Learning task. Knowing the data type of each attribute is one of the first things to establish.

I have worked through some sample datasets to give a clear understanding of this new feature.

Please go through the following blog post. Any kind of suggestion or feedback is welcome.

Link to the post: https://balaji612141526.wordpress.com/2021/08/06/introducing-new-feature-in-dataframe-project-datatypes/

Previous discussions can be found here: https://lists.pharo.org/empathy/thread/BFOHPRUU72MDYVTJP3YV2DQ5LAZHXELE and here: https://lists.pharo.org/empathy/thread/JZXKXGHSURC3DCDA2NXA7KDWZ2EINAZ5

Cheers

Balaji G

Hi Balaji,

> Please go through the following blog post. Any kind of suggestion or feedback is welcome.

That's a nice description, easy to follow. But there is a missing piece:

how do you actually find the dataType for a series of values? My first

guess was that you are using the same method as Collection >>

commonSuperclass, but then a nil value should yield UndefinedObject, not

Object as in your example.

Cheers

Konrad.

Hi Konrad

Thanks for pointing this out. You are right with how the data types are

calculated, similar to collection >> commonSuperClass. But this time, it is

calculated only during the creation of DataFrame once and for all.

Now coming to the next part, Kindly have a look at the below code.

series := #( nil nil nil ) asDataSeries. series calculateDataType.

"UndefinedObject" .

A nil value, or a Series of nil values yields UndefinedObject as its super

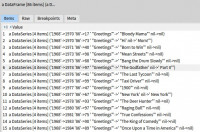

class. There was an error with the dataset used on that example blog post.

I have corrected it now. The problem was that the dataset was not clean,

and had some garbage values of different types in that column. This

resulted in a column, with type Object .

This was the dataset and specifically the last column resulted in this

mistake.
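To make the effect of a dirty column concrete, here is a minimal sketch of common-superclass inference in plain Pharo. It is not the DataFrame implementation; the blocks `commonSuper` and `typeOf` are hypothetical helpers written only for this illustration, and they rely on nothing beyond `includesBehavior:` and `superclass`.

```smalltalk
"A sketch only: plain Pharo, not the code behind DataFrame >> dataTypes."
| commonSuper typeOf |

"Walk up from class a until class b is that class or inherits from it."
commonSuper := [ :a :b |
	| c |
	c := a.
	[ b includesBehavior: c ] whileFalse: [ c := c superclass ].
	c ].

"Fold commonSuper over the classes of all values in a column."
typeOf := [ :values |
	values allButFirst
		inject: values first class
		into: [ :acc :each | commonSuper value: acc value: each class ] ].

typeOf value: #( nil nil nil ).   "UndefinedObject"
typeOf value: #( 1 2 3 ).         "SmallInteger"
typeOf value: #( 1 'n/a' 3 ).     "Object: one stray string is enough"
```

A single garbage value of an unrelated class pushes the inferred type all the way up to Object, which matches what happened with the uncleaned dataset.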

On Sat, Aug 7, 2021 at 9:58 PM Konrad Hinsen konrad.hinsen@fastmail.net wrote:

> That's a nice description, easy to follow. But there is a missing piece: how do you actually find the dataType for a series of values?

I am not quite sure what the point of the datatypes feature is.

    x := nil.
    aSequence do: [:each |
        each ifNotNil: [
            x := x ifNil: [each class] ifNotNil: [x commonSuperclassWith: each class]]].

doesn't seem terribly complicated.

My difficulty is that from a statistics/data science perspective,

it doesn't seem terribly useful.

I'm currently reading a book about geostatistics with R (based on a

survey of the Kola

peninsula). For that task, it is ESSENTIAL to know the units in which

the items are

recorded. If Calcium is measured in mg/kg and Caesium is measured in µg/kg,

you really really need to know that. This is not information you can

derive by looking

at the representation of the data in Pharo. Consider for example

1. mass of animals in kg

2. maximum speed of cars in km/h

3. volume of rain on successive dates, in mL (for a fixed area)

4. directions taken by sand-hoppers released at different times of day, in degrees

5. region of space illuminated by light bulbs, in steradians

These might all have the same representation in Pharo, but they are

semantically

very different. 1 and 2 are linear, but cannot be negative. 3 also

cannot be negative,

but the variable is a time series, which 1 and 2 are not. 4 is a

circular measure,

and taking the usual arithmetic mean or median would be an elementary blunder

producing meaningless answers. 5 is perhaps best viewed as a proportion.

(These are all actual examples, by the way.)
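The point about circular measures can be seen with two headings that both lie close to north. The sketch below uses the standard circular mean (average the unit vectors, then take the angle of the result); the sample values are made up for illustration and it assumes Pharo's `degreesToRadians`, `radiansToDegrees` and the two-argument `arcTan:`.

```smalltalk
| degrees radians sinSum cosSum naive circular |
degrees := #( 350 10 ).  "two headings, both close to north"

"Arithmetic mean: (350 + 10) / 2 = 180, i.e. due south."
naive := (degrees inject: 0 into: [ :acc :d | acc + d ]) / degrees size.

"Circular mean: sum the sines and cosines, then recover the angle."
radians := degrees collect: [ :d | d degreesToRadians ].
sinSum := radians inject: 0 into: [ :acc :a | acc + a sin ].
cosSum := radians inject: 0 into: [ :acc :a | acc + a cos ].
circular := (sinSum arcTan: cosSum) radiansToDegrees.  "approximately 0, i.e. due north"
```

Both columns would be stored as plain numbers, so nothing in the class of the values says which of the two computations is the right one.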

THIS kind of information IS valuable for analysis. The difference

between SmallInteger

and Float64 is nowhere near as interesting.

There's a bunch of weather data that I'm very interested in which has things like air temperature, soil temperature, relative humidity, wind speed and direction (last 5 minutes), gust speed and direction (maximum in last 5 minutes), illumination in W/m^2 (visible, UVB, UVA), rainfall, and of course date+time.

Temperatures are measured on an interval scale, so dividing them makes no sense.

Nor does adding them. If it's 10C today and 10C tomorrow, nothing is 20C. But

oddly enough arithmetic means DO make sense.

Humidity is bounded between 0 and 100; adding two relative humidities makes no

sense at all. Medians make sense but means do not.

Wind speed and direction are reported as separate variables,

but they are arguably one 2D vector quantity.

Illumination is on a ratio scale. Dividing one illumination by another makes

sense, or would if there were no moonless nights...

The total illumination over a day makes sense.

Rainfall is also on a ratio scale. Dividing the rainfall on one day by that

on another would make sense if only the usual measurement were not 0.

Total rainfall over a day makes sense.

The whole problem a statistician/data scientist faces is that there is important information, which you need even to know which basic operations make sense, that has already disappeared by the time Pharo stores it and cannot be inferred from the DataFrame. I remember one time I was given a CSV file with about 50 variables, and it took me about 2 weeks to recover this missing meta-information.
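As a rough illustration of the kind of meta-information meant here, the sketch below records a measurement scale per column by hand and uses it to decide whether an arithmetic mean is meaningful. The column names, the scale symbols and the `meanIsMeaningful` block are all hypothetical; nothing like this is part of DataFrame as described in this thread.

```smalltalk
| scales meanIsMeaningful |

"Hypothetical hand-written metadata: column name -> measurement scale."
scales := Dictionary new.
scales at: 'airTemperature' put: #interval.
scales at: 'relativeHumidity' put: #bounded.
scales at: 'windDirection' put: #circular.
scales at: 'illumination' put: #ratio.
scales at: 'rainfall' put: #ratio.

"Following the weather example: means are fine for interval and ratio scales,
 but not for bounded humidity or circular directions."
meanIsMeaningful := [ :column |
	#( #interval #ratio ) includes: (scales at: column) ].

meanIsMeaningful value: 'airTemperature'.   "true"
meanIsMeaningful value: 'windDirection'.    "false"
```

All five columns could hold values of the same class, so this table has to come from whoever collected the data.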

On Sat, 7 Aug 2021 at 04:23, Balaji G gbalaji20002000@gmail.com wrote:

> Please go through the following blog post. Any kind of suggestion or feedback is welcome.

Hi Balaji,

> Thanks for pointing this out. You are right about how the data types are calculated: it is similar to Collection >> commonSuperclass. But this time, the type is calculated only once, when the DataFrame is created.

That sounds good.

> A nil value, or a series of nil values, yields UndefinedObject as its superclass. There was an error in the dataset used in that example blog post. I have corrected it now.

OK, that explains my confusion.

Cheers,

Konrad.

"Richard O'Keefe" raoknz@gmail.com writes:

> My difficulty is that from a statistics/data science perspective, it doesn't seem terribly useful.

There are two common use cases in my experience:

- Error checking, most frequently right after reading in a dataset. A quick look at the data types of all columns shows if it is coherent with your expectations. If you have a column called "date" of data type "Object", then most probably something went wrong with parsing some date format.

- Type checking for specific operations. For example, you might want to compute an average over all rows for each numerical column in your dataset. That's easiest to do by selecting columns of the right data type.
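Both use cases can be sketched in a few lines. The thread does not say what kind of object DataFrame >> dataTypes answers, so the Dictionary below (column name -> class) is only an assumed stand-in, and the column names are invented.

```smalltalk
| types suspicious numeric |

"Assumed stand-in for the result of dataTypes: column name -> class."
types := Dictionary new.
types at: 'name' put: ByteString.
types at: 'age' put: SmallInteger.
types at: 'height' put: Float.
types at: 'date' put: Object.

"Use case 1: a column typed Object deserves a second look."
suspicious := (types keys select: [ :k | (types at: k) == Object ])
	asSortedCollection asArray.   "#('date')"

"Use case 2: keep the columns whose type is Number or a subclass of it."
numeric := (types keys select: [ :k | (types at: k) includesBehavior: Number ])
	asSortedCollection asArray.   "#('age' 'height')"
```

The check is deliberately shallow: it says which columns hold numbers, not whether averaging those numbers is sensible.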

You are completely right that data type information is not sufficient

for checking for all possible problems, such as unit mismatch. But it

remains a useful tool.

Cheers,

Konrad.

Neither of those use cases actually works.

Consider the following partial class hierarchy from my Smalltalk system:

    Object
        VectorSpace
            Complex
            Quaternion
        Magnitude
            MagnitudeWithAddition
                DateAndTime
                QuasiArithmetic
                    Duration
                    Number
                        AbstractRationalNumber
                            Integer
                                SmallInteger

There is a whole fleet of "numeric" things like Matrix3x3 which have some arithmetic properties but which cannot be given a total order consistent with those properties. Complex is one of them. It makes less than no sense to make Complex inherit from Magnitude, so it cannot inherit from Number. This means that the common superclass of 1 and 1 - 2 i is Object. Yet it makes perfect sense to have a column of Gaussian integers some of which have zero imaginary part.

So "the dataType is Object means there's an error" fails at the first hurdle. Conversely, the common superclass of 1 and DateAndTime now is MagnitudeWithAddition, which is not Object, but the combination is probably wrong, and the dataType test fails at the second hurdle.

"You might want to compute an average..." But dataType is no use for

that either, as I was at

pains to explain. If you have a bunch of angles expressed as Numbers,

you can compute an

arithmetic mean of them, but you shouldn't, because that's not how

you compute the

average of circular measures. The obvious algorithm (self sum / self

size) does not work at

all for a collection of DateAndTimes, but the notion of average makes

perfect sense and a

subtly different algorithm works well. (I wrote a technical report

about this, if anyone is interested.)
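One way such an average can be computed in Pharo is to average the offsets from a reference point instead of summing the timestamps themselves. This is only a sketch of that idea with made-up sample times; it is not necessarily the algorithm from the technical report mentioned above.

```smalltalk
| times base total mean |
times := {
	DateAndTime year: 2021 month: 8 day: 7 hour: 9 minute: 0 second: 0.
	DateAndTime year: 2021 month: 8 day: 7 hour: 11 minute: 0 second: 0.
	DateAndTime year: 2021 month: 8 day: 7 hour: 13 minute: 0 second: 0 }.

"DateAndTime + DateAndTime is not defined, so (sum / size) cannot work.
 Differences of timestamps are Durations, which can be added and divided."
base := times first.
total := times
	inject: Duration zero
	into: [ :acc :t | acc + (t - base) ].
mean := base + (total / times size).   "11:00 on 7 Aug 2021"
```

The column type DateAndTime alone would suggest that no average exists, while the average is perfectly well defined once the right arithmetic is used.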

dataType will tell you you CAN take an average when you cannot or should not.

dataType will tell you you CAN'T take an average when you really honestly can.

The distinctions we need to make are not the distinctions that the

class hierarchy makes.

For example, how about the distinction between ordered factors and

unordered factors?

On Mon, 9 Aug 2021 at 03:03, Konrad Hinsen konrad.hinsen@fastmail.net wrote:

> You are completely right that data type information is not sufficient for checking for all possible problems, such as unit mismatch. But it remains a useful tool.

"Richard O'Keefe" raoknz@gmail.com writes:

> Neither of those use cases actually works.

In practice, they do.

> Consider the following partial class hierarchy from my Smalltalk system:

That's not what I have to deal with in practice. My data comes in as a

CSV file or a JSON file, i.e. formats with very shallow data

classification. All I can expect to distinguish are strings vs. numbers,

plus a few well-defined subsets of each, such as integers, specific

constants (nil, true, false), or data recognizable by their format, such

as dates.

For internal processing, I might then want to redefine a column's data type to be something more specific. In particular, I might want to distinguish between "categorical" (a predefined finite set of string values) vs. "generic string". But most of the classes in a Smalltalk hierarchy just never occur in data frames. It's a simple data structure for simply structured data.

> "You might want to compute an average..." But dataType is no use for that either, as I was at pains to explain. If you have a bunch of angles expressed as Numbers, you can compute an arithmetic mean of them, but you shouldn't, because that's not how you compute the average of circular measures.

I agree. There are many things you shouldn't do for any given dataset

but which no formal structure will prevent you from doing. Data science

is very much about shallow computations, with automation limited to

simple stuff that is then applied to large datasets. Tools like data

type analysis are no more than a help for the data scientist, who is the

ultimate arbiter of what is or is not OK to do.

Think of this as the data equivalent of a spell checker. A spell checker

won't recognize bad grammar, but it's still a useful tool to have.

Cheers,

Konrad.